In the past two weeks we got to talk about some sorting algorithms and the efficiency on them. We learned how to express the time complexity of algorithms, the upper bound and lower bound, big Oh and big Teta. I will try to talk about the sorting algorithms and their efficiency a little bit.

Sorting algorithms are made to sort some kind of information and put them on a certain order, like sorting a random list into a decreasing order, but can be used to extract precise information from big databases. Sorting has always been a necessity of the humanity, for information itself, sorting is very useful, and thanks to that, sort has always attracted a lot of research in computer science, sorting are common used on everyday tasks, like sorting products by smaller price, sorting by name, location, and so on. But the ones that we looked on class are the listing sort algorithms, like Quick Sort, Merge Sort, Count Sort and Select Sort.

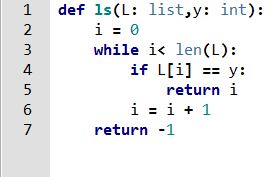

As a mean to calculate efficiency of sorting algorithms you have to analyse it for a list of size n (aleatory natural number), and check how many steps would take to complete the code, for example, in this code:

This code finds a number on a list

For this simple code we can see that the while loop will be repeated the size of the list times ( i will call it n) if the element y is not on the list, this would be the worst case, so for a tight bound on big Oh worst case we would have line #1 executed 1 time, line #3 to line #6 executed 3n times(1 for the condition check on line #3 at each loop until it fails, 1 time for the if in each loop and 1 time to the i assignment for each loop),and 1 time for the condition fail on the while loop on line 3, and line #7 executed one time to return a failure. That would give us a O(3n+3), the most important information on this notation is the 3n, because it will show us how the algorithm runtime changes with the size n list.

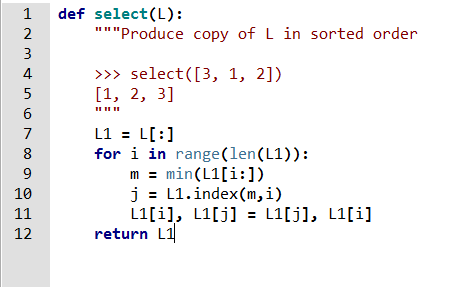

Let’s do on a sorting algorithm now:

Line # 7 : it will run 1 time

Line #8: n times (n = size of list)

Line #9: it’s calling a function that looks for the min of the list, it has to go trough all the list, so it will be n times( this function could be replaced by a for loop)

Line #10: it will run 1 time, just to give the index of the smaller item on the list

Line #11: 1 time each loop to trade the i item at the list with the j item at the list ( the smaller one found before)

Line #12 : 1 time

In the end, we can have for Selection sort O(1 + n(n+2) +1) that will be O(n^2 + 2n + 2), so we can say that it has a O(n^2) complexity. In selection sort doesn’t matter if the list is already sorted or if the list is random or if is sorted in the contrary order, it will always perform on a quadratic complexity O(n^2). Did you understand everything so far?

Most of the algorithms could assume different runtime variations with a list of n size, it could be quadratic, like Quick Sort, Logarithmic, like Merge Sort, linear, cubic, exponential (2^n) or even the worst case (n^n). But it will always depend on the input list, if its already sorted, or if is totally random, or if is on decreasing order, or increasing order. Each sorting algorithm runs differently on each type of input. In the last lab we did a comparative on the runtime of the sorting codes on Phyton, excluding the Phyton built in sort, the most effective on random lists was the Quick sort, on sorted lists was the Merge Sort and on reversed sorted lists was the Merge Sort again.

So you must be asking yourself, what’s the difference on the algorithms, all they do is sorting a list. Well, the difference comes in the way they do things.

Quick Sort: it chooses a pivot, then get all the numbers that are bigger than the pivot to the right of it, and all that are smaller to the left. Then we know that the pivot is in the right place, then it calls QuickSort on each of the other parts until the list is fully sorted. They choosing off the pivot is really important, because it could get your runtime to be much bigger with a bad pivot picked.

Merge Sort: Divides the list in half, sort the halves and merge then together again.

Selection Sort: This one it’s the simplest, you just take item by item in the list and compare it to each other item of the list, to put it in the right place.

Bubble Sort: The idea it’s to go trough the list a bunch of times, and in each time get the biggest element to the end of the list, by comparing adjacent elements and putting them in order. If it goes one whole time without swapping place it knows that the listed is sorted, so it will always need to do one more check.

Here it’s a very interesting way to learn about sorting algorithms, it’s a playlist with 6 short videos: